As alluded to in our previous article, Moving to Bare Metal - Prelude, this part dives a bit deeper into the technologies we’ll be using in our bare metal cluster.

I’ve talked to a good number of developers, and when it comes to CD, all they care about is that their code ends up in production; for the most part, they aren’t interested in how it actually gets there. That being said, don’t feel discouraged reading this article. I will do my best to paint the picture in layman’s terms, so hopefully you can walk away with a pretty good idea of what Kubernetes is and what you gain from using it.

So, let’s start with the biggest change in our migration: the switch from our current preferred method of hosting containers, Docker Swarm, to the new industry standard, Kubernetes.

What is Kubernetes?

I know, everyone is talking about it and hyping it up, and the industry regards it as the new standard in the DevOps world, but all of that is for a good reason. Simply put, Kubernetes is a container orchestrator. If you’ve ever worked with Docker or any other container runtime, you might have an idea of what containers are. Imagine them as environments in which your application runs (more precisely, an environment and the app packaged together), separated and isolated from the operating system (well, except for the kernel, which they share with the host). As for the orchestrator part, it’s exactly that: it specifies how the containers should run and how they should interact.

Now it might seem odd that a giant like Google created a whole technology just to run isolated applications and specify how they should talk to each other; one might think this problem should be solved by the container runtime itself. Unfortunately, that is not the case. The most popular container runtime, Docker, has its own solution, Docker Swarm (which we have been using), but the truth is that it often comes up short. It is simple, but sometimes you need to sacrifice simplicity to solve problems.

Kubernetes vs Docker Swarm

While I could go into specific details about the differences, the main takeaway is that Kubernetes provides a lot more control over pretty much everything, which in turn increases the complexity of the whole deployment process. Let’s check out a simple example of hosting a Next.js application with Swarm:

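```yaml
services:
  app:
    networks:
      - my_network
    image: kodius/image
    ports:
      - "9000:3000"       # host port 9000 -> container port 3000
    restart: always       # ignored by `docker stack deploy`; Swarm uses deploy.restart_policy
    deploy:
      replicas: 2         # run two copies of the container

networks:
  my_network:
    driver: overlay       # Swarm services need an overlay network to span nodes
```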

Okay, that was really straightforward. Now let’s check out the Kubernetes (k8s) solution:

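```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: app
  name: app-deployment
spec:
  replicas: 2                 # matches `deploy.replicas: 2` from the Swarm example
  revisionHistoryLimit: 2     # keep only two old ReplicaSets for rollbacks
  selector:
    matchLabels:
      app: app
  template:
    metadata:
      labels:
        app: app              # the label the Service below selects on
    spec:
      containers:
        - name: app
          image: kodius/image
          ports:
            - containerPort: 3000
```

And to achieve the same thing network-wise, we need a Service: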

```yaml
apiVersion: v1
kind: Service
metadata:
  name: app-service
spec:
  type: NodePort
  selector:
    app: app                  # routes to pods carrying this label
  ports:
    - port: 3000
      targetPort: 3000
      # nodePort must fall within the cluster's NodePort range (30000-32767 by default);
      # a value like 9000 only works if kube-apiserver's --service-node-port-range is extended
      nodePort: 30900
```

Woah, that was a lot. So naturally a question arises: why would anyone prefer the Kubernetes solution? Well, for one, it gives you fine-grained control over each part of the configuration, and once it’s up and running, you gain much more insight into the health of the system. For example, a Service can be of type ClusterIP, NodePort, LoadBalancer or ExternalName (plus the headless variant of ClusterIP), and NodePort from the example is just one of them. To expose the application with Swarm, all you get is:

```yaml
ports:
  - "9000:3000"
```

Of course, you can edit the whole network configuration to achieve something like a static-IP LoadBalancer service, but it gets messy really quickly (see https://stackoverflow.com/questions/39493490/provide-static-ip-to-docker-containers-via-docker-compose). The biggest reason for this is that Docker Swarm isn’t designed to be highly customizable or to provide a plethora of options, so if you ever bump into something that strays from the intended use, you will get stuck pretty quickly.
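For comparison, asking Kubernetes for a load-balanced entry point is a one-field change on the Service. The sketch below assumes the cluster has a load-balancer implementation installed: managed clouds provide one, while on bare metal it would take an add-on such as MetalLB, which is our assumption here and not something this article sets up. The name `app-lb` and port 80 are illustrative. Pinning a specific IP is implementation-specific: the generic `spec.loadBalancerIP` field has been deprecated since Kubernetes 1.24, and implementations generally offer their own annotations instead.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: app-lb              # hypothetical name
spec:
  type: LoadBalancer        # the load-balancer implementation assigns an external IP
  selector:
    app: app                # same pod label as in the Deployment above
  ports:
    - port: 80              # port exposed on the external IP
      targetPort: 3000      # containerPort of the Next.js app
```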

The biggest and simplest personal example I can provide is setting up zero-downtime deployments. I wanted to avoid the dreaded Nginx 502 screen while our application was being redeployed.

So, let’s look at the Swarm solution:

```yaml
# Service-level keys for the app service (single replica)
deploy:
  replicas: 1
  update_config:
    parallelism: 1            # update one task at a time
    order: start-first        # start the new container before stopping the old one
    failure_action: rollback  # roll back if the update fails
    delay: 20s                # wait between updating batches of tasks
healthcheck:
  # the image must contain curl for this check to work
  test: "curl -sSf -X POST http://localhost:3000/healthcheck || exit 1"
  interval: 10s
  retries: 12
  start_period: 20s
  timeout: 10s
```

This configuration covers the case where we run a single replica of our application. Without going into specifics, we ping the health check endpoint every 10 seconds and only switch over to the new container once it is up and running. It works, but honestly it feels clunky: a lot of manual configuration, running the curl command ourselves, and nowhere does it say this is how it was meant to be used. Kubernetes, on the other hand, supports both liveness and readiness probes, which were designed for exactly this use case. Liveness probes are responsible for restarting unresponsive containers inside pods (a pod is the Kubernetes unit that wraps one or more containers), while readiness probes tell the Service whether it should route traffic to that pod or not (our zero-downtime deployment magic). In combination, this makes for a much cleaner solution than our initial Swarm setup. In practice it looks like the following:

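```yaml
readinessProbe:           # set on the container in the Deployment's pod template
  httpGet:                # note: sends a GET request, whereas the Swarm check above used POST
    path: /healthcheck
    port: 3000
  initialDelaySeconds: 20
```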

Not to mention there are a number of settings and statuses designed specifically for this use case. Liveness probes are defined in a similar fashion, which makes this solution really elegant, while the Swarm solution takes quite a bit of overengineering to achieve the same result.
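Put together, a Deployment excerpt with both probes and an explicit rolling-update strategy might look like the sketch below. It is an illustration under assumptions, not our production manifest: reusing `/healthcheck` for the liveness probe, the timing values and the `maxSurge`/`maxUnavailable` settings are our own choices for this example. With `maxUnavailable: 0`, Kubernetes keeps the old pod until a new one passes its readiness probe, which is roughly the equivalent of Swarm’s `order: start-first`.

```yaml
# Excerpt of a Deployment spec (selector and labels omitted; illustrative values)
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1           # allow one extra pod running the new image during the update
      maxUnavailable: 0     # keep the old pod until the new one is Ready
  template:
    spec:
      containers:
        - name: app
          image: kodius/image
          ports:
            - containerPort: 3000
          readinessProbe:   # decides whether the Service sends traffic to this pod
            httpGet:
              path: /healthcheck
              port: 3000
            initialDelaySeconds: 20
            periodSeconds: 10
          livenessProbe:    # restarts the container if it stops responding
            httpGet:
              path: /healthcheck
              port: 3000
            initialDelaySeconds: 30
            periodSeconds: 10
            failureThreshold: 3
```

For a single replica, the default strategy (25% surge rounded up, 25% unavailable rounded down) already works out to these same values; spelling them out simply makes the intent explicit.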

I will stop here, because there are a number of blog posts comparing the two in even more detail. The main takeaway is that Kubernetes is much more sophisticated and provides better solutions for our needs, which is why we are making the switch. To dive further into this, check out these:

https://www.ibm.com/blog/docker-swarm-vs-kubernetes-a-comparison/

https://circleci.com/blog/docker-swarm-vs-kubernetes/

Now let’s see how we are going to implement this.

Our solution

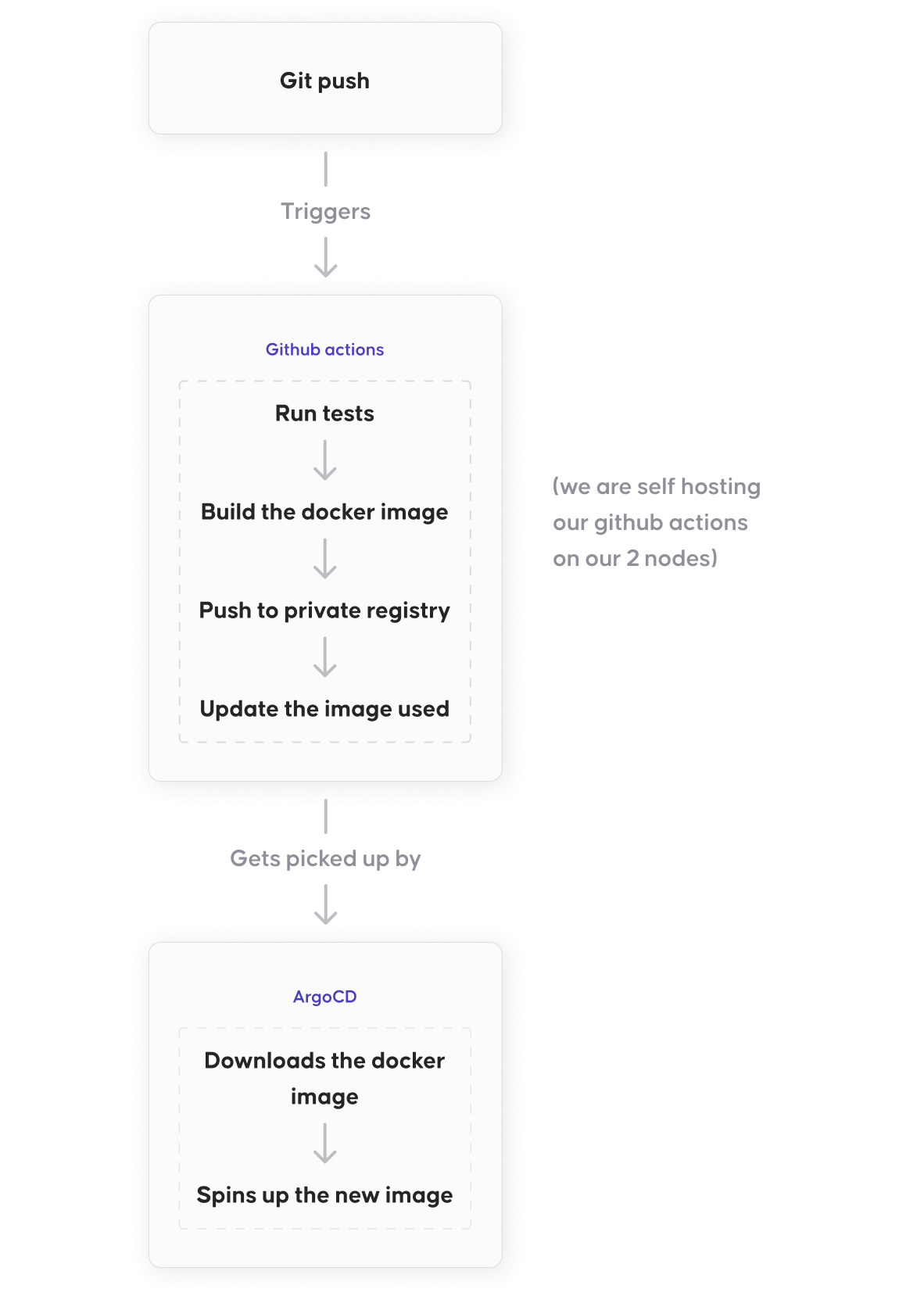

We want to be in control as much as possible. Our planned pipeline looks like the following:

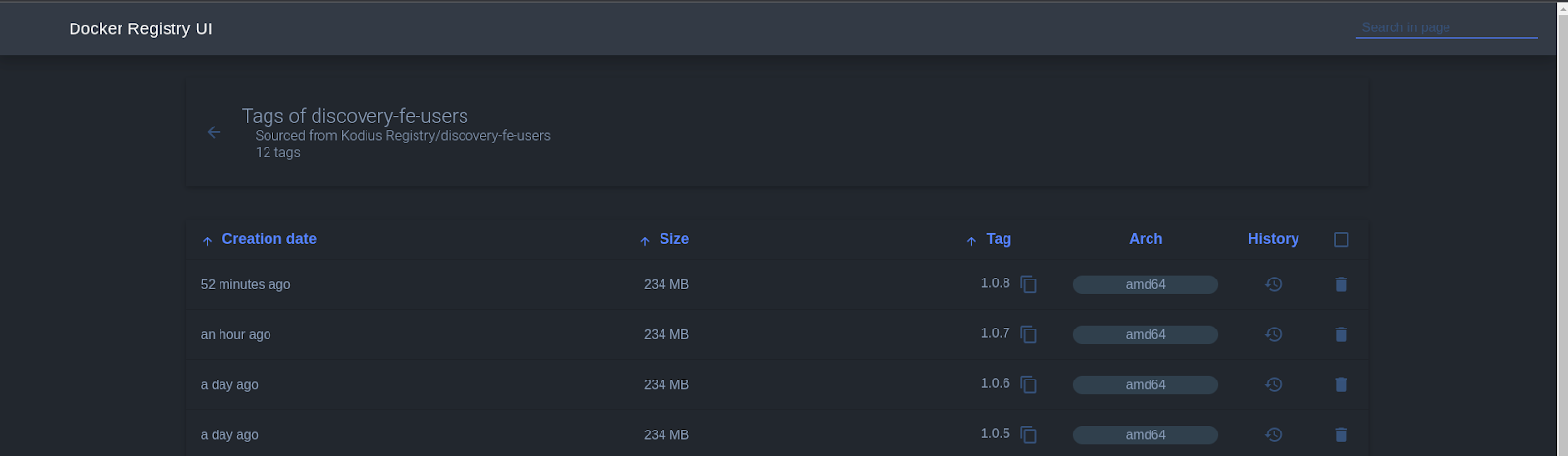

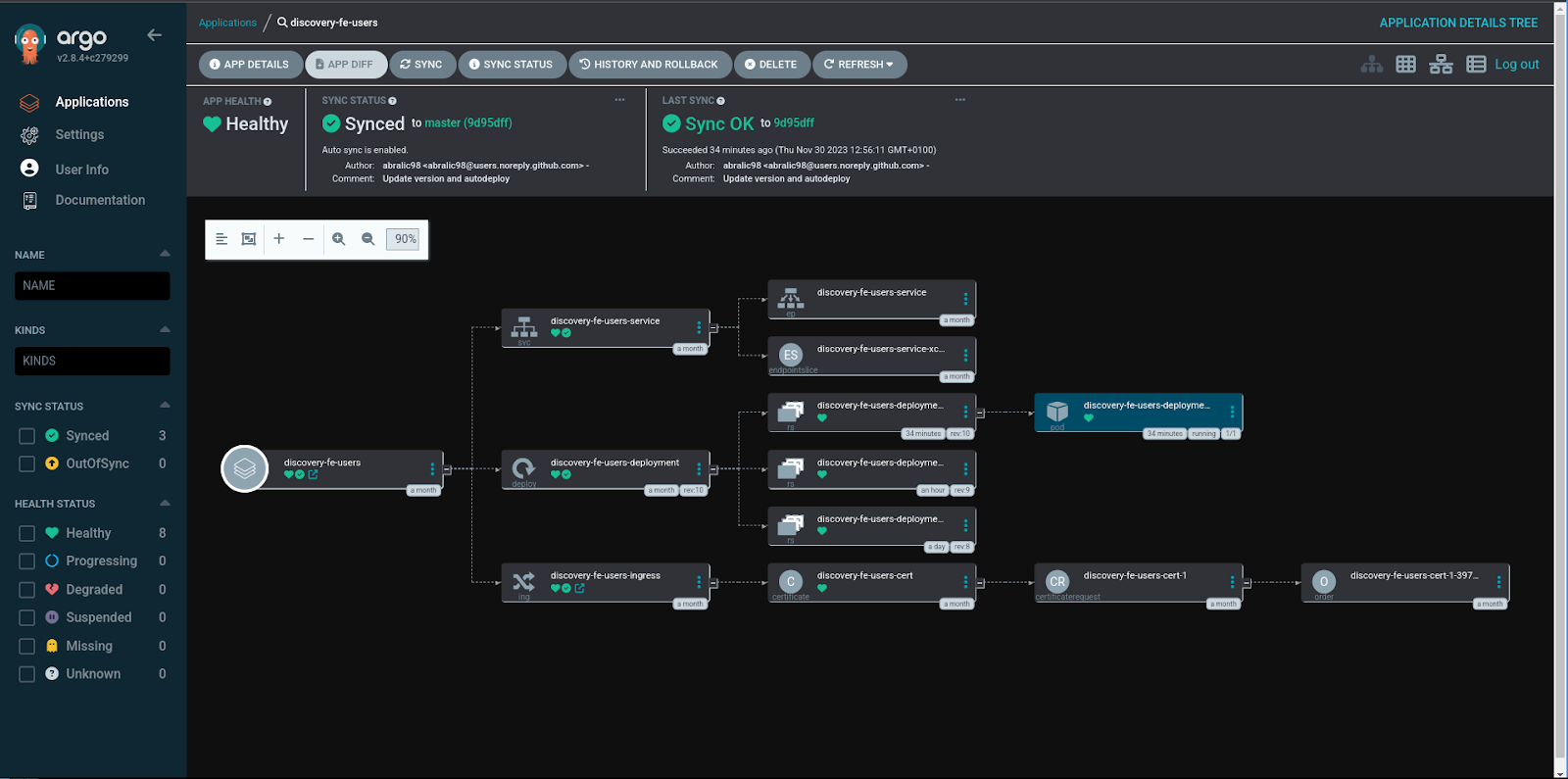

The two most important parts of our CI/CD will be GitHub Actions and Argo CD. We wanted to keep everything in our GitHub repositories, as this setup allows us to fully embrace the GitOps philosophy. Our self-hosted GitHub Actions runners will be responsible for running our tests and building the Docker images, which then get pushed to our private Docker image registry. Usually we would avoid using our own storage for images, but docker-slim shrinks our images to roughly a fifth of their original size, which makes this really practical.
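A minimal sketch of such a build job follows. Everything specific in it is an assumption for illustration: the workflow path, the registry host `registry.example.com`, the secret names, and the `npm ci`/`npm test` commands for the Next.js app (which also assume Node.js and Docker are installed on the self-hosted runner). The docker-slim step is left out because its flags depend on the version in use. Tagging the image with the commit SHA gives the deploy step an exact build to refer to.

```yaml
# .github/workflows/build.yml (hypothetical path)
name: build

on: push                      # build every branch; only master builds are auto-deployed later

jobs:
  build:
    runs-on: self-hosted      # our own runners instead of GitHub-hosted ones
    steps:
      - uses: actions/checkout@v4

      # Assumes a Node.js project with a "test" script in package.json
      - run: npm ci
      - run: npm test

      - uses: docker/setup-buildx-action@v3

      - uses: docker/login-action@v3
        with:
          registry: registry.example.com            # hypothetical private registry
          username: ${{ secrets.REGISTRY_USER }}    # hypothetical secret names
          password: ${{ secrets.REGISTRY_PASSWORD }}

      # Build the image and push it, tagged with the commit SHA
      - uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: registry.example.com/kodius/image:${{ github.sha }}
```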

So our build step is done and our images are pushed to our registry. Great, now we’re going to run them. The Kubernetes manifests are written as a Helm chart, which allows us to easily fill in the value for the image tag that is going to be deployed. After an image is pushed to our registry, we update the image tag in use to the new version. Argo CD then picks up that change in the Helm chart and syncs it to the cluster, which triggers a rolling update: Kubernetes creates a new ReplicaSet, whose pods pull the new image. Once our readiness probe marks the newly created pod as ready, the Service switches traffic over to the new ReplicaSet. The only part where we could improve would be using Argo CD Image Updater, but since we also build images from branches, we want the option to deploy those images manually (only images built from master get auto-deployed). Your use case may vary.
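For reference, an Argo CD Application that tracks such a chart could look roughly like this. The repository URL, chart path, names and namespaces are hypothetical. Inside the chart, the container image would be templated along the lines of `image: "kodius/image:{{ .Values.image.tag }}"`, so bumping `image.tag` in `values.yaml` and committing it is the change Argo CD picks up; branch builds would be deployed by setting that tag by hand.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app                                            # hypothetical application name
  namespace: argocd                                    # namespace of a default Argo CD install
spec:
  project: default
  source:
    repoURL: https://github.com/example/app-deploy.git # hypothetical repository
    targetRevision: master
    path: charts/app                                   # hypothetical chart location
    helm:
      valueFiles:
        - values.yaml                                  # holds image.tag, bumped after each master build
  destination:
    server: https://kubernetes.default.svc             # the cluster Argo CD itself runs in
    namespace: app                                     # hypothetical target namespace
  syncPolicy:
    automated:
      prune: true                                      # delete resources removed from the chart
      selfHeal: true                                   # revert manual drift back to what Git says
```

With automated sync enabled, a commit that changes the tag is rolled out without anyone pressing "Sync" in the Argo CD UI.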

Conclusion

This was an intro to our Kubernetes setup. We plan to go more in depth on our choice to run applications on our own hardware rather than using cloud services (EKS, GKE, OpenShift, etc.), and to provide more guides to specific elements of our pipeline, so stay tuned!